Validation & Verification of AI

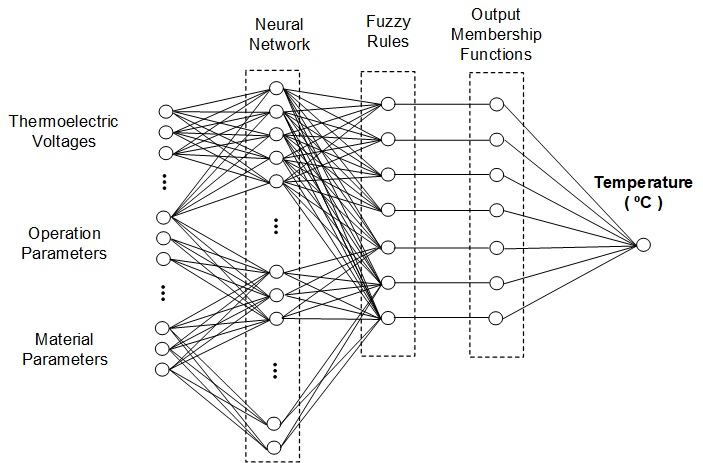

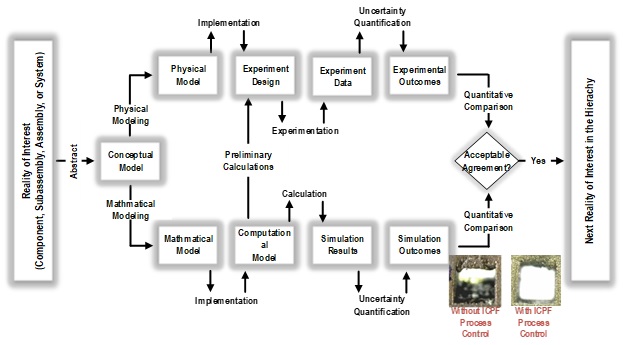

Artificial intelligence (AI) technologies such as neural networks, fuzzy logic, and genetic algorithms are needed for the control, surveillance, diagnostics, monitoring, and operation of weapon systems and nuclear power plants, but deploying them requires verification and validation (V&V) of nonprocedural software. Developing and verifying scenario-based code becomes increasingly difficult as a system grows more complex, because the number of situations to be handled explodes combinatorially and some situations will inevitably be unforeseen. Digital systems have already caused costly delays in bringing new systems online because of unexpected V&V problems, even though those systems were not entirely software-based. No explicit methods exist today to ensure the reliability of intelligent software: conventional scenario-based procedural testing, analytic V&V, and model checking cannot be applied to AI paradigms to ensure early error detection. Realizing the promise of AI systems will therefore require a new concept of V&V, because conventional V&V, which relies on theory-based, analytic, and independently testable models, is suitable only for procedural software.

Nonparametric Approach

Combined with nonparametric statistical techniques, our framework will assess figures of merit for the early detection of faulty behavior in AI systems. It couples the AI's ability to generalize with nonparametric n-fold cross-validatory resampling to automate the detection and quantification of wrong behavior in AI systems. This yields a confidence measure for verification and enables early detection of model faults for validation. The confidence measure will address an AI system's “black box” problem, which is critical for many applications.
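The cross-validatory resampling idea can be sketched in Python. This is a minimal illustration under stated assumptions, not the framework's implementation: a nearest-centroid classifier stands in for an arbitrary AI model, and the names `nearest_centroid_fit`, `n_fold_errors`, and `percentile_interval` are hypothetical. Each fold is held out in turn, the model is retrained on the remaining folds, and the per-fold error rates are summarized with a nonparametric bootstrap percentile interval as one possible confidence measure.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

# A labeled example: (feature vector, class label).
Sample = Tuple[Sequence[float], int]
Model = Callable[[Sequence[float]], int]


def nearest_centroid_fit(train: List[Sample]) -> Model:
    """Hypothetical stand-in for an AI model: a nearest-centroid classifier."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, y in train:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

    def predict(x: Sequence[float]) -> int:
        # Pick the class whose centroid is closest in squared Euclidean distance.
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return predict


def n_fold_errors(data: List[Sample], n_folds: int,
                  fit: Callable[[List[Sample]], Model], seed: int = 0) -> List[float]:
    """Hold out each fold in turn, retrain on the rest, return per-fold error rates."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::n_folds] for i in range(n_folds)]
    errors = []
    for k, test in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != k for s in fold]
        model = fit(train)
        wrong = sum(1 for x, y in test if model(x) != y)
        errors.append(wrong / len(test))
    return errors


def percentile_interval(values: List[float], level: float = 0.9,
                        n_boot: int = 2000, seed: int = 1) -> Tuple[float, float]:
    """Nonparametric bootstrap percentile interval for the mean fold error."""
    rng = random.Random(seed)
    means = sorted(statistics.fmean(rng.choices(values, k=len(values)))
                   for _ in range(n_boot))
    lo = means[int((1 - level) / 2 * n_boot)]
    hi = means[min(n_boot - 1, int((1 + level) / 2 * n_boot))]
    return lo, hi


if __name__ == "__main__":
    # Synthetic two-class data: two well-separated Gaussian clusters.
    rng = random.Random(42)
    data = [((rng.gauss(0, 1), rng.gauss(0, 1)), 0) for _ in range(100)]
    data += [((rng.gauss(3, 1), rng.gauss(3, 1)), 1) for _ in range(100)]
    errs = n_fold_errors(data, n_folds=10, fit=nearest_centroid_fit)
    print("per-fold error:", [round(e, 3) for e in errs])
    print("mean error:", round(statistics.fmean(errs), 3))
    print("90% bootstrap interval:", percentile_interval(errs))
```

Because the interval comes from resampling the observed fold errors rather than from an assumed error distribution, it stays nonparametric; a wide interval or an unusually bad fold would be a signal to inspect the model more closely.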

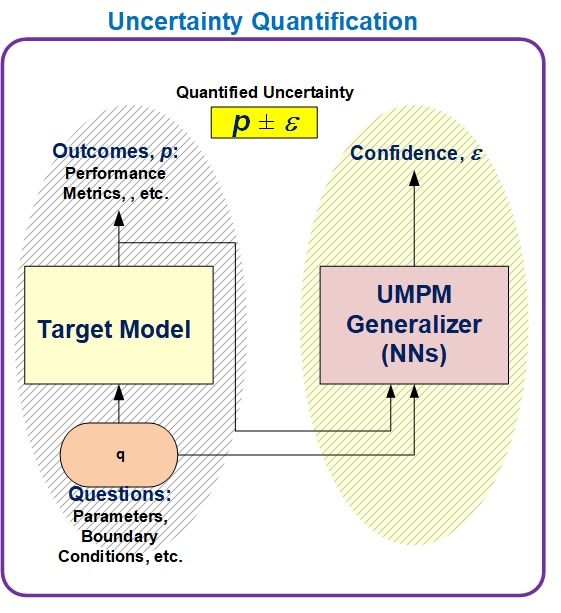

Uncertainty Quantification

Our approach addresses uncertainty in a target model (or algorithm) comprehensively: it performs uncertainty quantification (UQ) on the model without interfering with the target process. It yields new quantitative information on how the target model behaves in terms of overall error (including propagated and model errors) when the model faces different or novel parameters, boundary conditions, constraints, and so on.
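One generic way to quantify uncertainty while treating the target model strictly as a black box is Monte Carlo propagation: sample the inputs, call the model unchanged, and summarize the spread of its outputs. The sketch below is an illustration rather than the authors' method; it assumes Gaussian input perturbations and independent propagated and model errors, and `target_model`, `propagate`, `summarize`, and `combined_sd` are hypothetical placeholders.

```python
import math
import random
import statistics
from typing import Callable, Dict, List, Tuple


def target_model(params: Dict[str, float]) -> float:
    """Hypothetical black-box model: we only call it, never modify it."""
    return params["gain"] * math.exp(-params["decay"] * 2.0) + params["offset"]


def propagate(model: Callable[[Dict[str, float]], float],
              nominal: Dict[str, float],
              rel_sd: Dict[str, float],
              n_samples: int = 5000,
              seed: int = 0) -> List[float]:
    """Perturb each input with Gaussian noise (assumed) and collect the model outputs."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_samples):
        sample = {k: rng.gauss(v, abs(v) * rel_sd.get(k, 0.0)) for k, v in nominal.items()}
        outputs.append(model(sample))
    return outputs


def summarize(outputs: List[float], level: float = 0.9) -> Tuple[float, float, float, float]:
    """Mean, standard deviation, and nonparametric percentile interval of the outputs."""
    ordered = sorted(outputs)
    n = len(ordered)
    lo = ordered[int((1 - level) / 2 * n)]
    hi = ordered[min(n - 1, int((1 + level) / 2 * n))]
    return statistics.fmean(ordered), statistics.stdev(ordered), lo, hi


def combined_sd(propagated_sd: float, model_error_sd: float) -> float:
    """Overall uncertainty, assuming propagated and model errors are independent."""
    return math.hypot(propagated_sd, model_error_sd)


if __name__ == "__main__":
    nominal = {"gain": 10.0, "decay": 0.5, "offset": 1.0}
    rel_sd = {"gain": 0.05, "decay": 0.10}  # offset treated as exact
    mean, sd, lo, hi = summarize(propagate(target_model, nominal, rel_sd))
    print(f"mean={mean:.3f} sd={sd:.3f} 90% interval=({lo:.3f}, {hi:.3f})")
    # Hypothetical model-error SD, e.g. estimated from residuals against reference data.
    print(f"overall sd={combined_sd(sd, 0.2):.3f}")
```

Swapping in different nominal values or perturbation widths is a simple way to probe how the model behaves under novel parameters without touching its internals.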

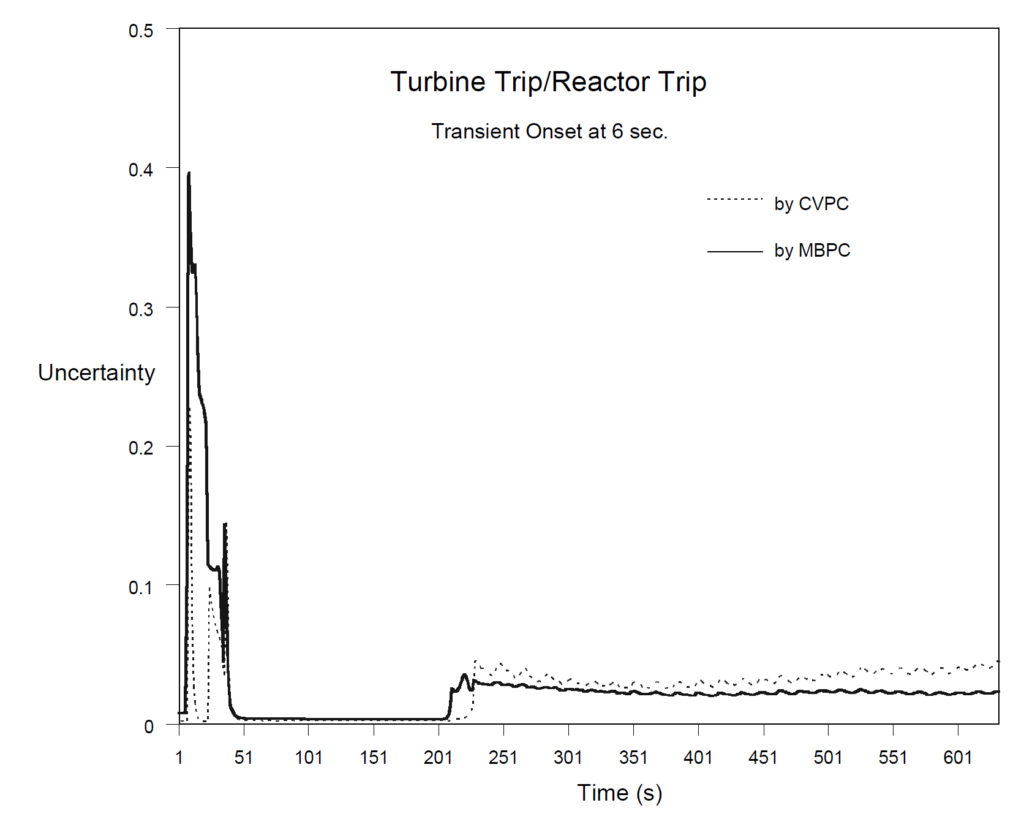

As shown in the accompanying figure, when the error bound (uncertainty) falls below 0.1, the AI diagnosis of a nuclear accident is considered accurate with high confidence. This classification, combined with its error bound, can be provided as the final result to power plant operators or technical support staff each time input data are presented.
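The decision rule above amounts to comparing each diagnosis's error bound against 0.1. A minimal sketch, assuming a hypothetical `Diagnosis` record and example accident labels chosen only for illustration:

```python
from dataclasses import dataclass

# Threshold from the text: below this error bound, the diagnosis is high-confidence.
ERROR_BOUND_THRESHOLD = 0.1


@dataclass
class Diagnosis:
    label: str          # hypothetical accident class predicted by the AI
    error_bound: float  # uncertainty attached to that prediction


def report(d: Diagnosis, threshold: float = ERROR_BOUND_THRESHOLD) -> str:
    """Combine the classification with its error bound into an operator-facing message."""
    if d.error_bound < threshold:
        status = "HIGH CONFIDENCE"
    else:
        status = "LOW CONFIDENCE - verify with other indications"
    return f"{d.label}: error bound {d.error_bound:.3f} ({status})"


if __name__ == "__main__":
    # Hypothetical stream of diagnoses, one per input-data presentation.
    for d in [Diagnosis("LOCA", 0.04), Diagnosis("SGTR", 0.27)]:
        print(report(d))
```

The comparison is strict, matching "falls below 0.1": a bound of exactly 0.1 is reported as low confidence.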